This is the Linux app named Autograd, whose latest release can be downloaded as 1.0.zip. It can be run online on OnWorks, a free hosting provider for workstations.

Download this app named Autograd and run it online with OnWorks for free.

Follow these instructions in order to run this app:

- 1. Download this application to your PC.

- 2. Open our file manager at https://www.onworks.net/myfiles.php?username=XXXXX with the username that you want.

- 3. Upload this application to that file manager.

- 4. Start the OnWorks Linux online, Windows online, or macOS online emulator from this website.

- 5. From the OnWorks Linux OS you have just started, go to our file manager at https://www.onworks.net/myfiles.php?username=XXXXX with the username that you want.

- 6. Download the application, install it and run it.

SCREENSHOTS

Autograd

DESCRIPTION

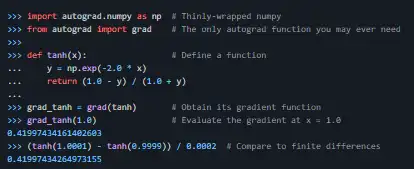

Autograd can automatically differentiate native Python and NumPy code. It can handle a large subset of Python's features, including loops, if statements, recursion and closures, and it can even take derivatives of derivatives of derivatives. It supports reverse-mode differentiation (a.k.a. backpropagation), which means it can efficiently take gradients of scalar-valued functions with respect to array-valued arguments, as well as forward-mode differentiation, and the two can be composed arbitrarily. The main intended application of Autograd is gradient-based optimization. For more information, check out the tutorial and the examples directory in the project. You can continue to differentiate as many times as you like, and use NumPy's vectorization to evaluate scalar-valued functions across many different input values at once.

Features

- Simple neural net

- Convolutional neural net

- Recurrent neural net

- LSTM

- Neural Turing Machine

- Backpropagating through a fluid simulation

Programming Language

Python

This application can also be fetched from https://sourceforge.net/projects/autograd.mirror/. It is hosted on OnWorks so that it can be run online in the easiest way from one of our free operating systems.