LLM Foundry is a Linux app whose latest release can be downloaded as v0.3.0.zip. It can be run online through OnWorks, a free hosting provider for workstations.

Download this app, LLM Foundry, and run it online with OnWorks for free.

Follow these instructions to run this app:

- 1. Download this application to your PC.

- 2. Enter our file manager https://www.onworks.net/myfiles.php?username=XXXXX with the username that you want.

- 3. Upload this application to that file manager.

- 4. Start the OnWorks Linux online, Windows online, or macOS online emulator from this website.

- 5. From the OnWorks Linux OS you have just started, go to our file manager https://www.onworks.net/myfiles.php?username=XXXXX with the username that you want.

- 6. Download the application, install it, and run it.

SCREENSHOTS:

LLM Foundry

DESCRIPTION:

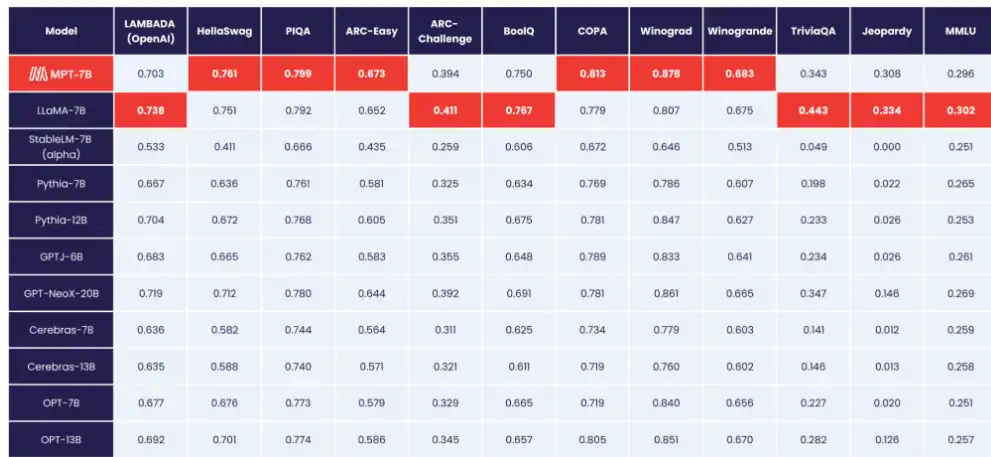

Introducing MPT-7B, the first entry in our MosaicML Foundation Series. MPT-7B is a transformer trained from scratch on 1T tokens of text and code. It is open source, available for commercial use, and matches the quality of LLaMA-7B. MPT-7B was trained on the MosaicML platform in 9.5 days with zero human intervention at a cost of ~$200k.

Large language models (LLMs) are changing the world, but for those outside well-resourced industry labs, it can be extremely difficult to train and deploy these models. This has led to a flurry of activity centered on open-source LLMs, such as the LLaMA series from Meta, the Pythia series from EleutherAI, the StableLM series from StabilityAI, and the OpenLLaMA model from Berkeley AI Research.
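To put the training figures quoted above in perspective, the short sketch below turns them into an average throughput and an average cost per billion tokens. It is only back-of-the-envelope arithmetic: it assumes the run was continuous for the full 9.5 days and treats the approximate "~$200k" as exactly 200,000 USD. The function and constant names are made up for this illustration and are not part of LLM Foundry.

```python
# Figures quoted in the description; the cost is approximate ("~$200k").
TOKENS_TRAINED = 1_000_000_000_000  # 1T tokens
TRAINING_DAYS = 9.5
TRAINING_COST_USD = 200_000

SECONDS_PER_DAY = 24 * 60 * 60


def tokens_per_second(tokens, days):
    """Average tokens processed per second over the whole run."""
    return tokens / (days * SECONDS_PER_DAY)


def cost_per_billion_tokens(cost_usd, tokens):
    """Average cost in USD for each billion training tokens."""
    return cost_usd / (tokens / 1_000_000_000)


if __name__ == "__main__":
    rate = tokens_per_second(TOKENS_TRAINED, TRAINING_DAYS)
    cost = cost_per_billion_tokens(TRAINING_COST_USD, TOKENS_TRAINED)
    print(f"average throughput: {rate:,.0f} tokens/s")
    print(f"average cost: ${cost:,.0f} per billion tokens")
```

Under these assumptions the run averages roughly 1.2 million tokens per second across the whole training cluster, at about $200 per billion tokens.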

Features

- Licensed for commercial use (unlike LLaMA)

- Trained on a large amount of data (1T tokens like LLaMA vs. 300B for Pythia, 300B for OpenLLaMA, and 800B for StableLM)

- Prepared to handle extremely long inputs thanks to ALiBi (we trained on inputs of up to 65k tokens and can handle up to 84k, vs. 2k-4k for other open-source models)

- Optimized for fast training and inference (via FlashAttention and FasterTransformer)

- Equipped with highly efficient open-source training code

- MPT-7B Base is a decoder-style transformer with 6.7B parameters
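The long-input feature in the list above relies on ALiBi, which replaces positional embeddings with a per-head linear penalty on attention scores. The sketch below illustrates the general technique in plain Python and is not taken from the LLM Foundry code: the function names, the head count, and the sequence length are assumptions chosen for illustration, and only power-of-two head counts are handled.

```python
def alibi_slopes(n_heads):
    """Per-head slopes for a power-of-two head count: a geometric
    sequence starting at 2^(-8/n_heads) with the same ratio."""
    if n_heads <= 0 or n_heads & (n_heads - 1):
        raise ValueError("this sketch only handles power-of-two head counts")
    start = 2.0 ** (-8.0 / n_heads)
    return [start ** (h + 1) for h in range(n_heads)]


def alibi_bias(n_heads, seq_len):
    """Causal ALiBi bias: bias[h][i][j] = slope_h * (j - i) for j <= i,
    and -inf for future positions (j > i), which are masked out."""
    neg_inf = float("-inf")
    return [
        [
            [m * (j - i) if j <= i else neg_inf for j in range(seq_len)]
            for i in range(seq_len)
        ]
        for m in alibi_slopes(n_heads)
    ]


if __name__ == "__main__":
    # Hypothetical sizes, chosen only to keep the printout small.
    bias = alibi_bias(n_heads=8, seq_len=4)
    for row in bias[0]:  # first head, slope 0.5
        print(row)
```

The bias is added to the query-key attention scores before the softmax. Because it depends only on the distance between query and key positions, the same rule can be evaluated for any sequence length, which is what lets a model be run on inputs longer than those seen in training.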

Programming Language

Python

This application can also be fetched from https://sourceforge.net/projects/llm-foundry.mirror/. It is hosted on OnWorks so that it can be run online in the easiest way from one of our free operating systems.