Welcome to OnWorks

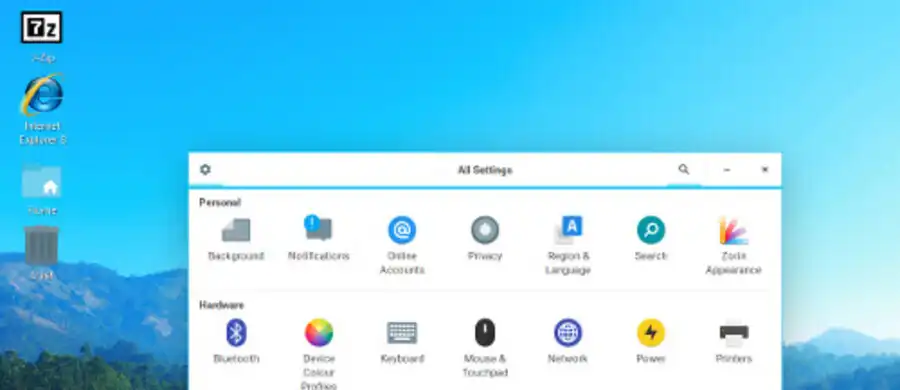

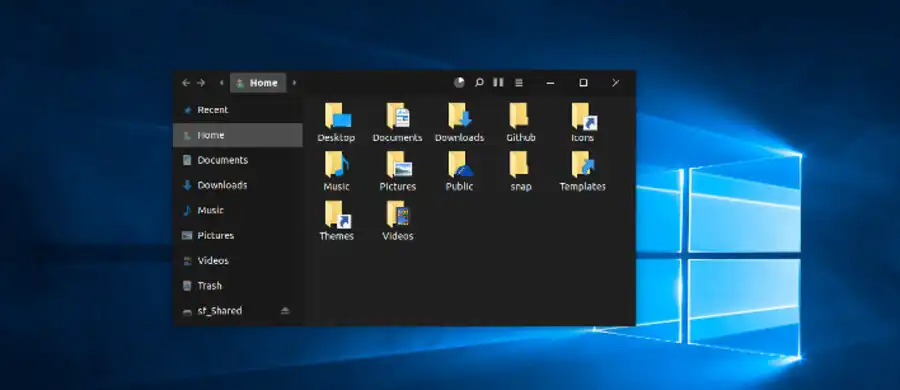

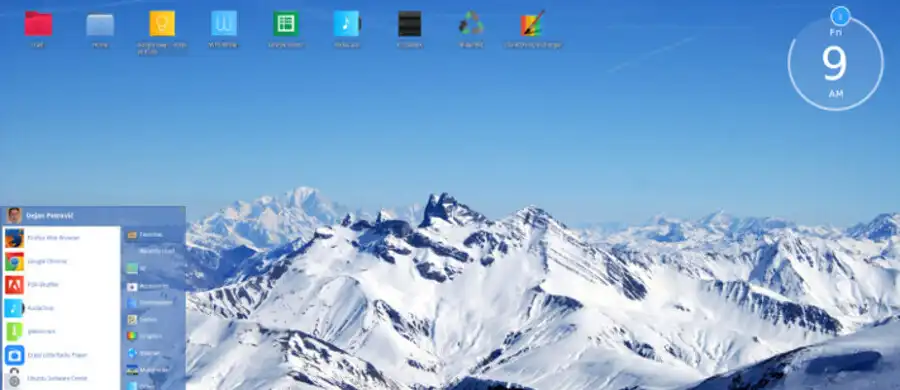

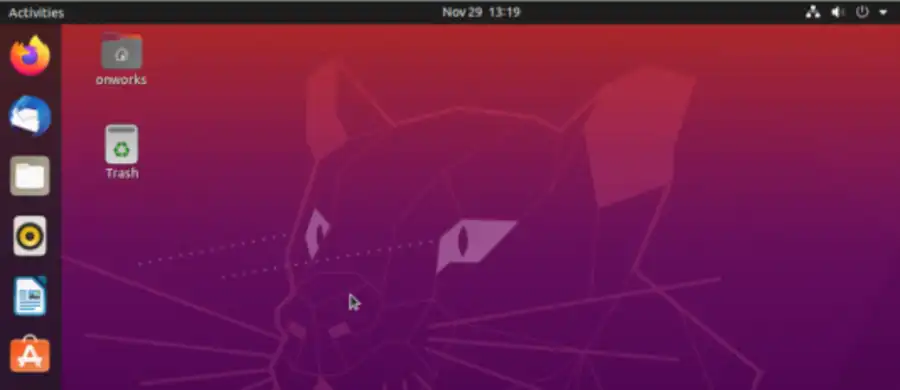

OnWorks is a free hosting provider that lets you run workstations using only your web browser. Workstations can be based on many operating systems, including CentOS, Fedora, Ubuntu, and Debian. Because OnWorks is a multi-device platform, customers can run and test any type of operating system from anywhere. The platform is simple, feature-rich, lightweight, and easy to use. With OnWorks as your cloud computing provider, you can choose from many types of workstations and run them free of charge. The workstations come with software installed for office work, graphics, video, games, and more.